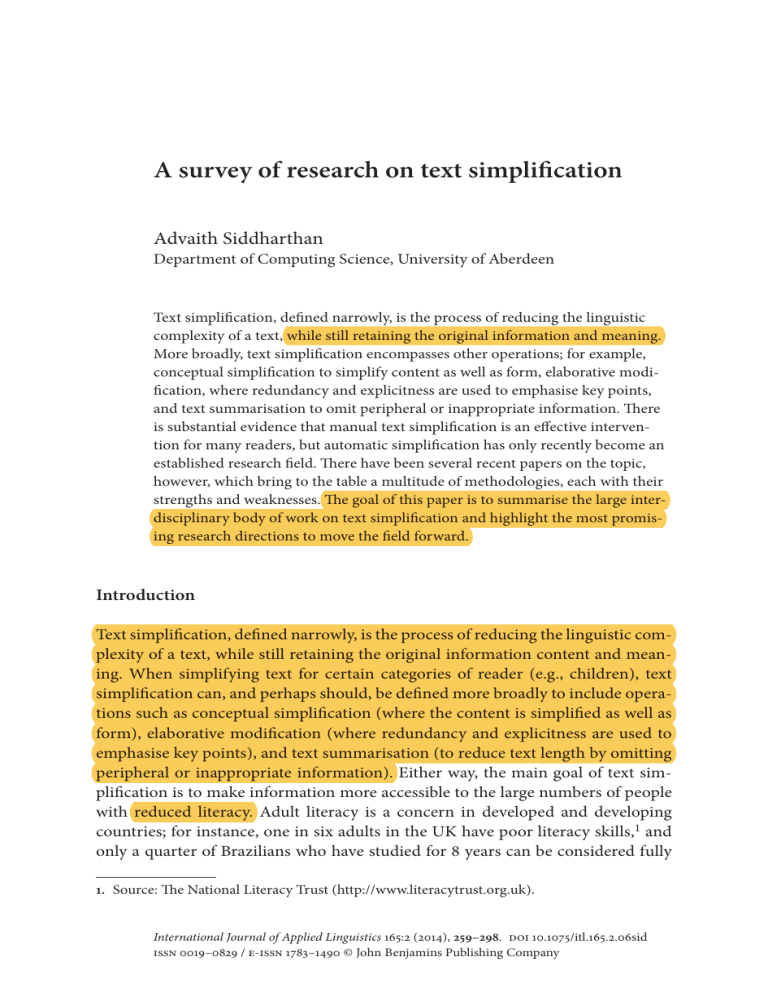

A survey on text simplification
